How To Make Your iPhone Alarm Louder

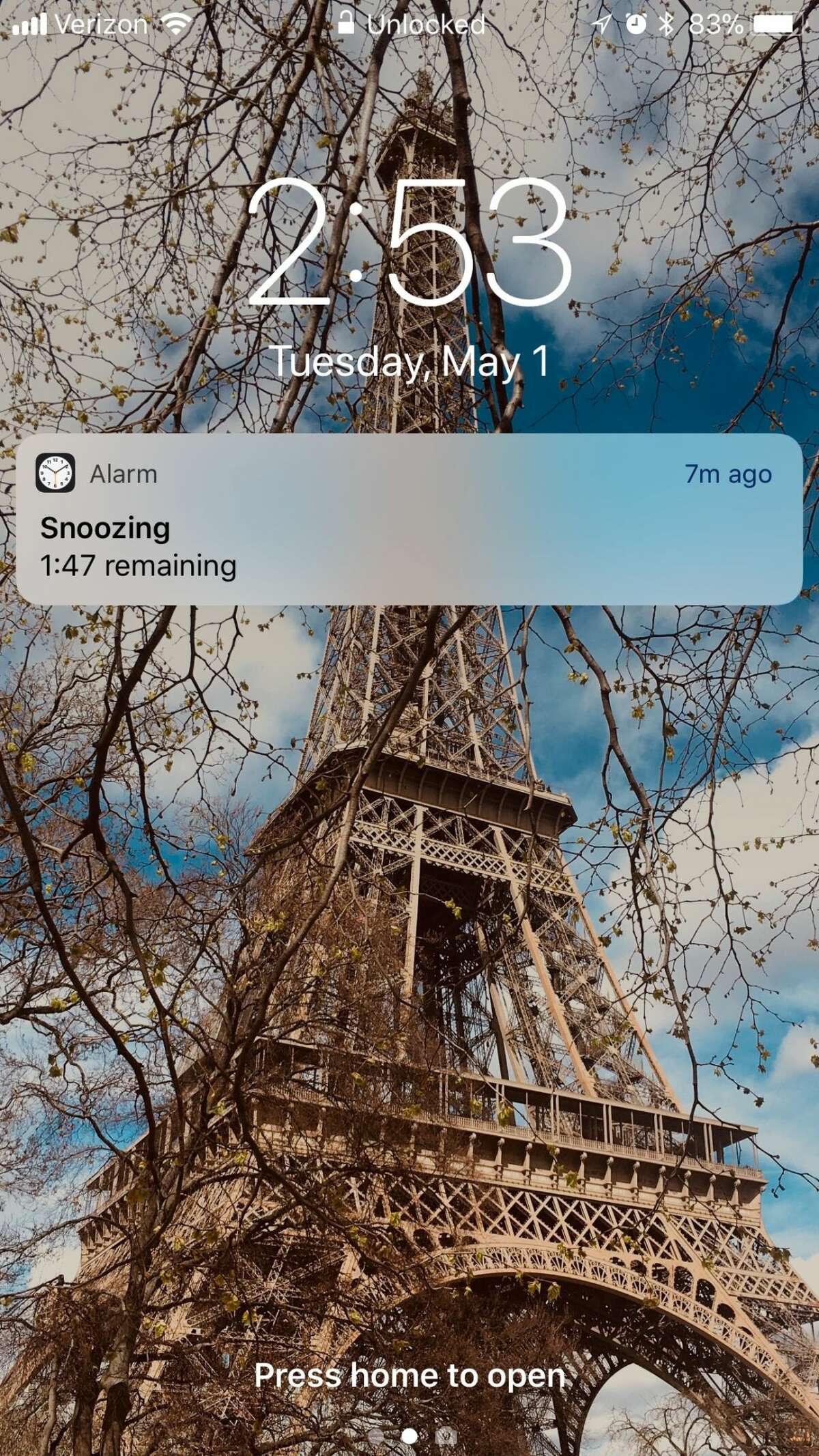

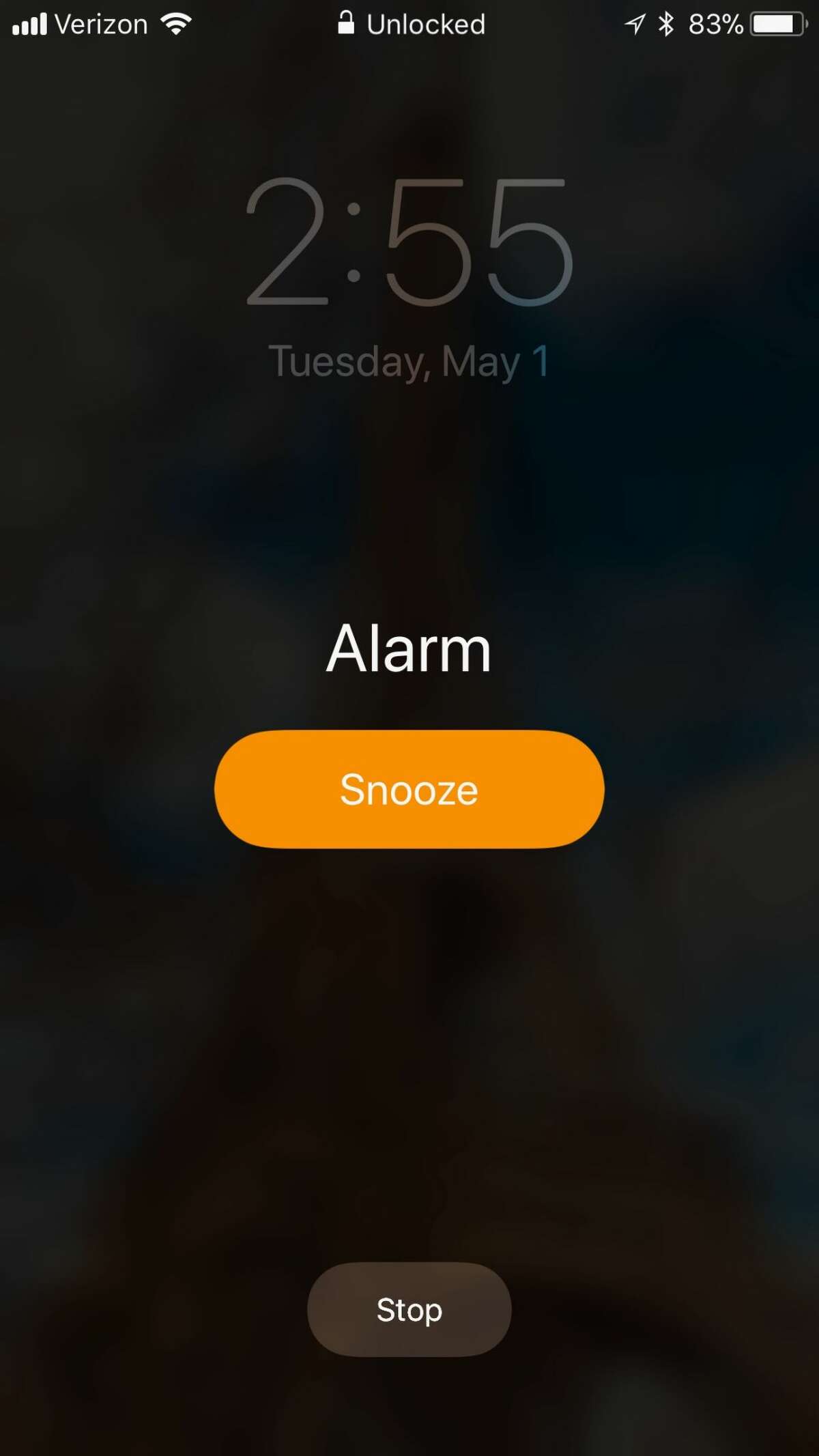

An apparent glitch causes the iPhone alarm to go off so quietly, you may not hear it. After 15 minutes, it snoozes automatically.

Screenshot

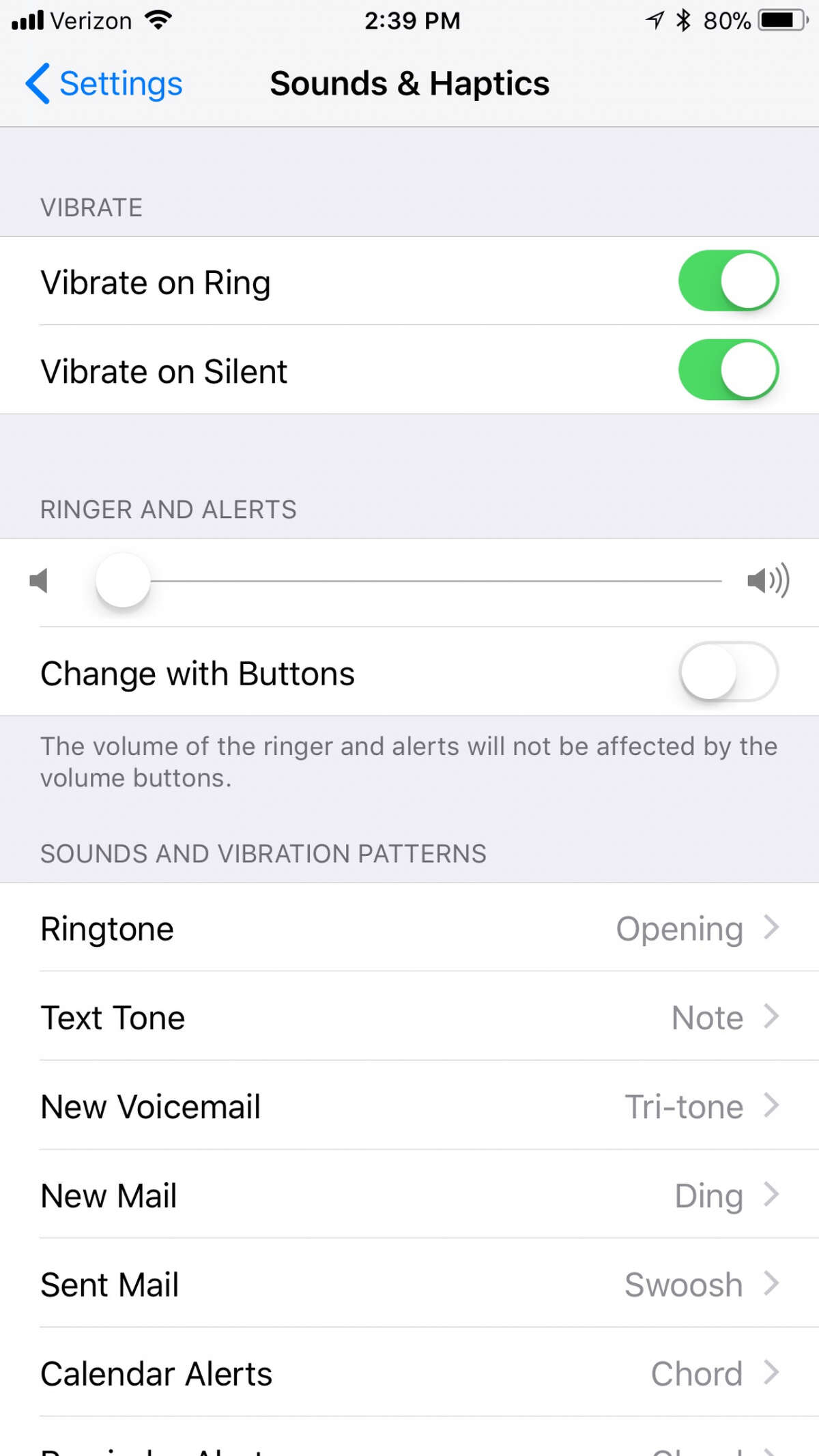

You can fix the problem by going to the device's settings, tapping "Sounds & Haptics" and adjusting the volume under "Ringer and Alerts." The volume may have reverted to being turned all the way down for no apparent reason.

Screenshot


Damian Dovarganes /Associated

Creepy, real technologies that could be on the next 'Black Mirror'

Impossible meat substitutes

A Redwood City-based company named Impossible Burger had people lining up outside upscale restaurants in 2017 for its meatless burger patty, which looks and tastes like meat and even appears to bleed like it. The secret sauce is a genetically engineered molecule called soy leghemoglobin (heme for short), which comes from the root of soy plants. Heme is also found in meat.

The company has raised about $300 million from investors including Bill Gates, has a new plant in Oakland and plans to bring the burger to restaurants nationwide. It's pioneering a wave of food tech that could produce an array of meatless foods that taste like the real thing, reducing the need for animal farming that harms the environment.


Gabrielle Lurie / The Chronicle 2016

Impossible meat substitutes, cont'd

Where it could go "Black Mirror" wrong: Impossible Burger's success with the public has also brought it contempt from environmental groups. Because the burger's heme comes from a previously uneaten source – soy root – the FDA hasn't determined that it's either safe or unsafe to eat and is concerned it could be an allergen, despite the company's efforts to show it's safe. Unlike with drugs, food companies don't need FDA approval – companies don't even need to publicize whether they found all their ingredients to be safe.

One concerned environmental group told the Chronicle, "Currently our FDA, EPA and USDA regulations are falling behind the very quickly moving development of new technologies, and one of the ways that our regulatory agencies are falling behind is they are not assessing the process of genetically engineering these ingredients."

There's no evidence of anyone getting sick from the Impossible Burger. But Silicon Valley isn't exactly known for being patient with bringing products to market, and if the Impossible Burger leads to Impossible Chicken Wings and Impossible Pork Chops, one can see potentially dangerous laboratory shortcuts being made.

Gabrielle Lurie/The Chronicle

Cameras that know when you're smiling … or not smiling

Google's just-released first camera is noteworthy for several things, but the most striking feature has to be that it has no shutter button. Instead, the tiny Google Clips uses AI to take pictures and GIF-worthy video shorts on its own. The camera is especially trained to know things like when your dogs and cats are doing something cute, or when your baby is smiling. And it can learn which faces are important to you if you let it study your Google Photos library.

This can all seem alarmingly creepy, but the camera comes with a major safeguard: nothing goes to the cloud, and you have to manually decide to export a photo to your phone.

Where it could go "Black Mirror" wrong: This iteration of the Google Clips doesn't connect to the cloud, but what if the next version or a competitor's camera did, with the promise of better picture quality or enhanced features? The appeal of letting an algorithm take optimized photos may be too much to pass up, bringing with it dystopian possibilities. Eventually, the AI could influence people's behavior in front of the camera, coercing smiles or waves – maybe even telling them how to dress to guarantee the most likes – to activate it. With no one to control the shutter, bystanders' privacy may be violated or embarrassing moments could be captured and broadcast. There is also the possibility of surveillance abuse, if the camera discriminates on factors like skin color or gender. And if the boss is giving a presentation, smiles may be counted.

Washington Post photo by Geoffrey A. Fowler

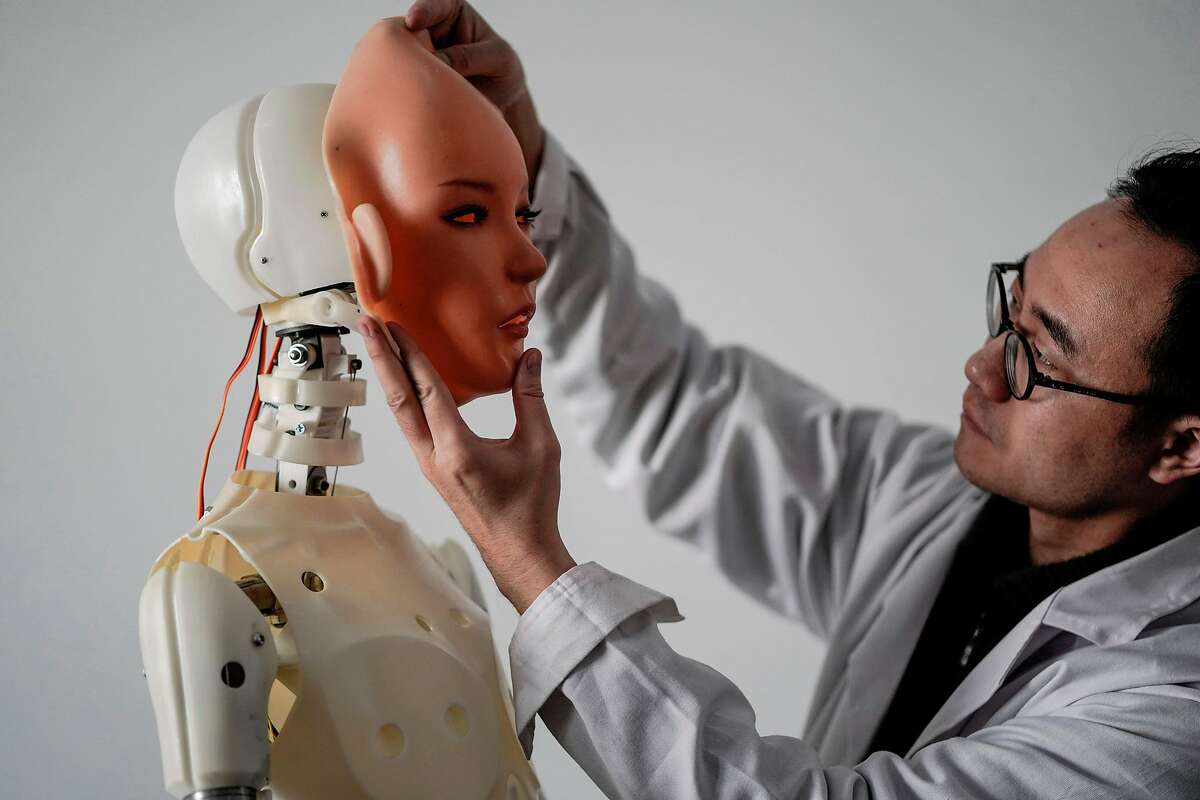

Relationship-killing, non-consenting sex robots

Sex robots are probably many years away from resembling the hyper-realistic ones of "Westworld." For one thing, they're not even close to being able to walk or hold a conversation. One of the most advanced prototypes was revealed by Abyss Creations at January's CES show in Las Vegas – that was basically a customizable robot face that would be planted on a porno-ready body. The head alone will cost $8,000 to $10,000.

The need for sexual companionship is enormous among many demographics. This includes the aging, people in countries such as China where female sex dolls are big sellers because men greatly outnumber women, and otherwise normal people who are going through depression or loneliness. Sex trafficking could decline as needs are met elsewhere. A sex doll even helped Ryan Gosling get his groove back once.

Where it could go "Black Mirror" wrong: As people gain more control over the look and behavior of sex robots, consequences could mount. Is it ethical to make a robot that looks like a celebrity? How about your ex, or someone who rejected you? Is it OK to program a robot to resist sex – in essence making robot rape legal, a la "Westworld," and would men be conditioned to ignore consent with real women?

Also, socially challenged men and boys would probably be the greatest adopters of sex robots, and a whole generation...

FRED DUFOUR/AFP/Getty Images

Hijacked internet-controlled sex toys

We are entering the age of teledildonics: remote-controlled sex using vibrators, vagina replicas, and more to simulate sex in real time. The potential applications are many: couples can use it to maintain intimacy over long distance, and porn models can show their customers what it feels like to have sex with them without ever touching. So far, the toys aren't very lifelike, but that's bound to change over time.

Where it could go "Black Mirror" wrong: We got a good look at the downside in February, when an Austrian company found various security flaws in an internet-controlled dildo made by Vibratissimo, called the "Panty Buster." The most disturbing flaw was that a hacker could easily guess the link required in the app to take control of the vibrator, without the toy owner's consent or knowledge. The company also found it easy to obtain account passwords and any photos the user had uploaded to the app.

Leah Millis/The Chronicle

AI-powered cameras that shock you into Instagram success

If only the character whose life unravels via social media in Black Mirror's "Nosedive" episode had consulted a German designer's current art project. Peter Buczkowski developed an AI-powered camera within a camera grip with an appropriately "Black Mirror" name: Prosthetic Photographer.

The device turns the human into a prosthetic more than anything, using electrical shocks to tell us when our camera lens is trained on a photo most likely to earn social-media likes – literally twitching our index finger into taking the photo. The device learned what makes a likeable photo by ingesting a dataset of reactions to more than 17,000 photos. You can find more technical information about his project on his website.

Buczkowski told SFGATE he didn't conceive of the project as an elaborate trolling of people's social-media obsessions, but reactions to it "actually made me think about portals like Instagram, creativity and why we enjoy certain things. I really enjoy the (response) describing an art degree as just learning the right rules. That's actually what my algorithm has done and what we do every day scrolling through fitness, Golden Gate Bridge and food images."


Peter Buczkowski

AI-powered cameras that shock you into Instagram success (cont'd)

Where it could go "Black Mirror" wrong: Buczkowski said the electrical shock's strength can be adjusted by the user and doesn't actually hurt, though people who tried it reported being scared of being hurt. Using AI-administered pain (or the fear of it) as a teaching or punishment device sounds like a nightmarish future scenario indeed.

Also, we've already seen proof that people are socially influenced to take the same Instagram photos while traveling, and a device that actually tells you which photos will get you the most likes seems almost an inevitability. That could have a profound effect on diluting photography, though Buczkowski said it may create new perspectives as well: "As I have experienced it, the computer finds patterns in the training set we as humans are not familiar with and are probably not enjoying (yet)."

Peter Buczkowski

Virtual human therapists

Academic and industry researchers are finding that people are more likely to share intimate personal details with a bot than with a human or via anonymous form. This includes a 2017 study of National Guard members returning from Afghanistan, conducted by USC's Institute for Creative Technologies.

That study used a bot that resembled a human therapist sitting in a chair (pictured above) and speaking calmly as a real therapist would. It performs eerily human-like functions such as making small talk to build rapport with the patient and asking follow-up questions based on changes in the patient's body language and facial expressions, using its own body-language adjustments. You can watch the therapy in action here.

The study found people were more forthcoming about PTSD symptoms with the virtual therapist than they were on an anonymized form. A 2014 study by the same institute found that people were more likely to disclose mental-health issues to a virtual human when there was no "Wizard of Oz" real human overseeing it.

Mark Meadows, bot developer and CEO of Emeryville-based Botanic.io, told SFGATE his company found people were 80 percent more likely to trust a health-care bot than a human. "We're building a bot for a university where kids can talk to the bot and not feel embarrassed," said Meadows, whose company is working to authenticate bots worldwide to make AI more trustworthy.


Skip Rizzo/YouTube

Virtual human therapists, cont'd

Where it could go "Black Mirror" wrong: Skip Rizzo, part of the same USC institute that conducted those virtual-therapist studies, has written of the dangers in AI mixing with mental health. For one thing, some mental-health patients might be most vulnerable to bonding too much with their virtual therapists – especially as the bots get more sophisticated and lifelike.

"Are people going to form bonds with AI characters, 'Her' style?" Rizzo told SFGATE. "I don't look at that so much as a risk, but people more at risk are people that don't have a lot going on in their life anyway."

Rizzo wrote a separate paper covering the ethical pitfalls of using AI data to create a numerical scale for "happiness." It's one thing for a virtual human to get people to share their unhappiness, but what if the bot starts diagnosing it incorrectly? "While this tendency is theoretically unavoidable, efforts should be invested in guaranteeing that it will not flatten the diversity of human experience," Rizzo wrote.

More terrifying, there's the chance the virtual therapist is hacked, exposing a person's sensitive health data, or even engineered to attack the patient by giving them bad advice. As this report, whose authors include Stanford researchers, points out (see page 24), "As AI develops further, convincing chatbots may elicit human trust by engaging people in longer dialogues, and perhaps eventually masquerade visually as another person in a video chat."

Warner Bros.

Designing your baby's genes with a Tinder-like app

We don't have to ask when we'll be able to alter the genes of human embryos, because it's already being done on non-viable ones in China. The CRISPR-Cas9 gene-editing technique, which uses a natural enzyme to snip out and add genes like a Photoshop cut-and-paste tool, may never see widespread use, but experts do predict something similar will be used to create designer babies in our lifetimes – "It is unavoidably in our future," one bioethicist told The Guardian in 2017.

When that future comes, it's not hard to imagine a couple plugging their DNA into a smart device, inputting a set of desired traits and diseases to avoid, and then swiping a screen to their hearts' content until they find their perfect match.

Where it could go "Black Mirror" wrong: What if gene-editing gets so good at preventing diseases that couples who either don't want or can't afford the technique are shunned, or even prosecuted for it, the way parents might be today for not vaccinating their child? There's also the potential for racism and bias to eradicate normal genetic traits, like skin color or facial features. People's looks may become just as subject to trends as shoes.

Cecile Lavabre/Getty Images

Spotify algorithms turning musicians into digital slaves, cont'd

Where it could go "Black Mirror" wrong: While Spotify is tremendous at serving its listeners, it's taken great criticism for shortchanging its musicians. As one professor told Mashable, a songwriter on Spotify would need to draw 4 million streams to make minimum wage for a month in California.

Musicians did get good news this year, when the U.S. Copyright Royalty Board ruled Spotify must pay them nearly 50 percent more for interactive streaming over the next five years. But with Spotify's algorithm playing a much larger role in what people listen to, there could be an ever-building pressure on musicians to give the company what it wants. That includes not just music, but music that will be approved by the algorithm. Maybe even demanded by the algorithm.

More than one music lover has written recently of Spotify reducing their favorite tunes to Muzak. As Greg Saunier of the indie band Deerhoof told one writer, "If you don't bow down to Spotify, you might as well tell whoever runs the guillotine that's above your neck to just let her rip. These streaming services are literally the only option for a music career nowadays."

"Black Mirror" has toyed with uploading consciousness to the cloud several times, and one can imagine a band either virtually or literally...

Scott Strazzante / The Chronicle

AI-assisted fake porn

It takes no money or computer science training right now to use machine-learning technology that swaps a person's face to the body of a porn actor to create a believable video. A Reddit user created a free tool dedicated to that purpose called FakeApp, using an algorithm devised by another Redditor pursuing fake porn.

Of course, there are other uses for this kind of technology, and big-budget movies such as "Rogue One" and "Guardians of the Galaxy" are already using it to bring actors back from the dead or make them look younger.

Where it could go "Black Mirror" wrong: The potential for even harsher and more embarrassing versions of revenge porn, blackmail, and fake news is as infinite as the technology. Even if we know the videos are faked, they can still be used to harass and embarrass women on social media in ways Twitter hasn't seen yet. And much of the technology is already here.

A January report by Vice found convincing Reddit videos swapping the faces of such celebrities as Daisy Ridley (pictured above in "The Last Jedi") and Jessica Alba onto the bodies of porn actresses, and found users passing off fake nude celebrity videos as real. One expert told the site, "What is new is the fact that it's now available to everybody, or will be ... It's destabilizing. The whole business of trust and reliability is undermined by this stuff."

Lucasfilm Ltd.

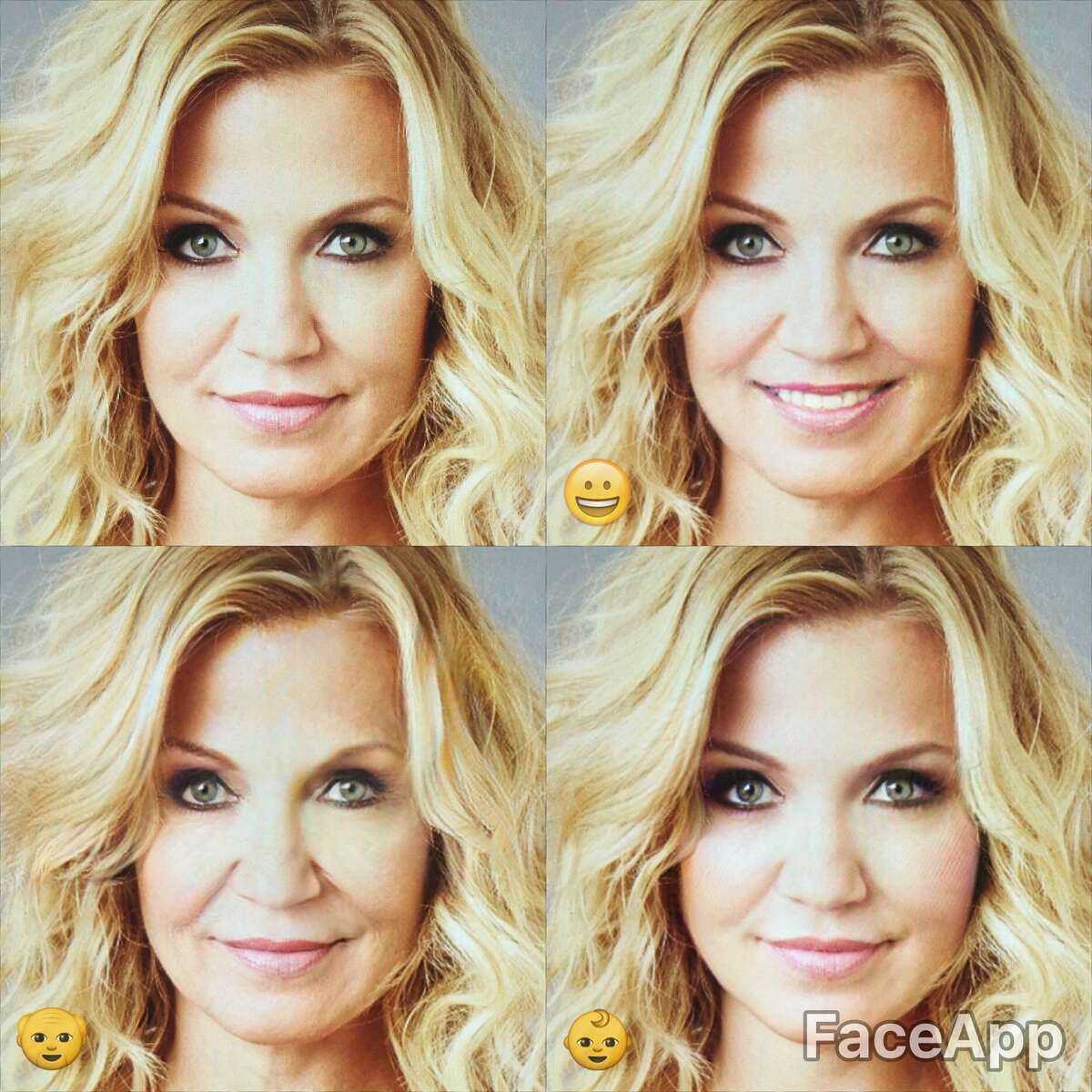

Gender swapping and other facial-recognition apps

The most recent Facebook fad has involved uploading your photo to FaceApp and showing your friends how you'd look as a different gender. This and other apps use facial-recognition technology that may be fun to use, but also possibly unsafe.

Where it could go "Black Mirror" wrong: The FaceApp terms of service allow the Russian company to "access, collect, monitor, store on your device, and/or remotely store one or more 'device identifiers,'" including face ID. The company can also share that information with third-party companies. If face ID is the password standard of the future – and the future is already here with the iPhone X – there's a chance of popular social-media apps being used to steal millions of people's identities.

"This particular app doesn't add much extra danger," Michael Bradley of the law firm Marque Partners told ABC in Australia. "However, consenting to those uses for commercial purposes is an additional step which has no upside for humans."

FaceApp

Self-driving vehicles

Autonomous vehicles are already chauffeuring people on test runs – with human supervision. As of December, Uber reported completing a combined 50,000 self-driving car rides in Pittsburgh and Phoenix. Waymo logged 352,545 self-driving miles on California roads in 2017, and GM reported over 131,000 miles.

Equipped with an array of cameras, sensors, and AI that learns from its own driving as well as that of other cars reporting data to the cloud, the self-driving vehicle is hailed by its creators as the safer way to travel. Taxis, long-haul trucks, and school buses could all be autonomous a decade from now.


Associated Press

Self-driving cars, cont'd

Where it could go "Black Mirror" wrong: What if those robot vehicles become giant missiles? A recent report on the dangers of AI, written by 26 people representing Stanford, Oxford, and Cambridge universities and the Elon Musk-funded OpenAI, warns of the vulnerabilities in deploying networks of self-driving cars.

The report says that by subtly changing a stop sign image by a few pixels, a self-driving car could be confused into crashing. This kind of danger only compounds if many cars are controlled by a single AI system: "A worst-case scenario in this category might be an attack on a server used to direct autonomous weapon systems, which could lead to large-scale friendly fire or civilian targeting."

Others are warning of a more practical danger: what happens when thousands of Uber drivers, taxi drivers, and truck drivers are forced out of work. Fringe Democratic presidential candidate Andrew Yang recently told the New York Times, "All you need is self-driving cars to destabilize society ... That one innovation will be enough to create riots in the street."

For the moment, self-driving cars without human supervision are still a long way from replacing human drivers: Bryan Salesky, CEO of self-driving startup Argo AI, wrote in a blog post, "Those who think fully self-driving vehicles will be ubiquitous on city streets months from now or even in a few years are not well connected to the...

Eric Risberg/Associated Press

Senior care robots

With America's aging population escalating by the year – one in four Americans is expected to be 65 or older by 2060 – robot caretakers are already getting experimental use. Honda's ASIMO robot can walk up stairs and serve drinks, and the newly released ElliQ system combines an AI-powered robot with a tablet to do everything from reminding seniors to take medication to recommending activities based on what it's learned about the user. Eventually, a home could be equipped with robots that bathe, clothe, and talk to seniors – replacing the need for a human caregiver.

Where it could go "Black Mirror" wrong: There's plenty that can go right to make senior-care robots a service worth having. But one nightmare scenario could involve a robot malfunctioning while bathing or carrying a senior. Also, as this AI report, whose authors include Stanford and other researchers, points out regarding Internet of Things devices (see page 40), "The diffusion of robots to a large number of human-occupied spaces makes them potentially vulnerable to remote manipulation for physical harm, as with, for example, a service robot hacked from afar to carry out an attack indoors."

To prevent such robot-on-senior attacks, a likely safeguard will be to...

Being scammed by your own Facebook profile

The Buzzfeed reporter who talked to security experts about "laser phishing" said they were so concerned about hackers creating tools to enable it that they wouldn't reveal much about the technology.

We already know to watch out for phishing, where a phony email is made to look real and carries a malicious link that invites the mark to reveal personal information. Laser phishing would make this much tougher to detect because its AI combs through our social media profiles and beyond to craft phony emails from a friend or family member that are so realistic that we may even be expecting them.

Where it could go "Black Mirror" wrong: In myriad ways, but the worst part may be that it could create believable digital versions of us that get us into legal trouble, fired from jobs, or ostracized from family.

Damian Dovarganes /Associated Press

Employee-tracking data sensors

Sensors and trackers already exist – clothing maker L.L. Bean announced in February that it would be installing sensors into some of its coats and boots to track how customers use them. And the fitness app Strava recently found itself in hot water when security analysts found it could be used to reveal military bases and personnel.

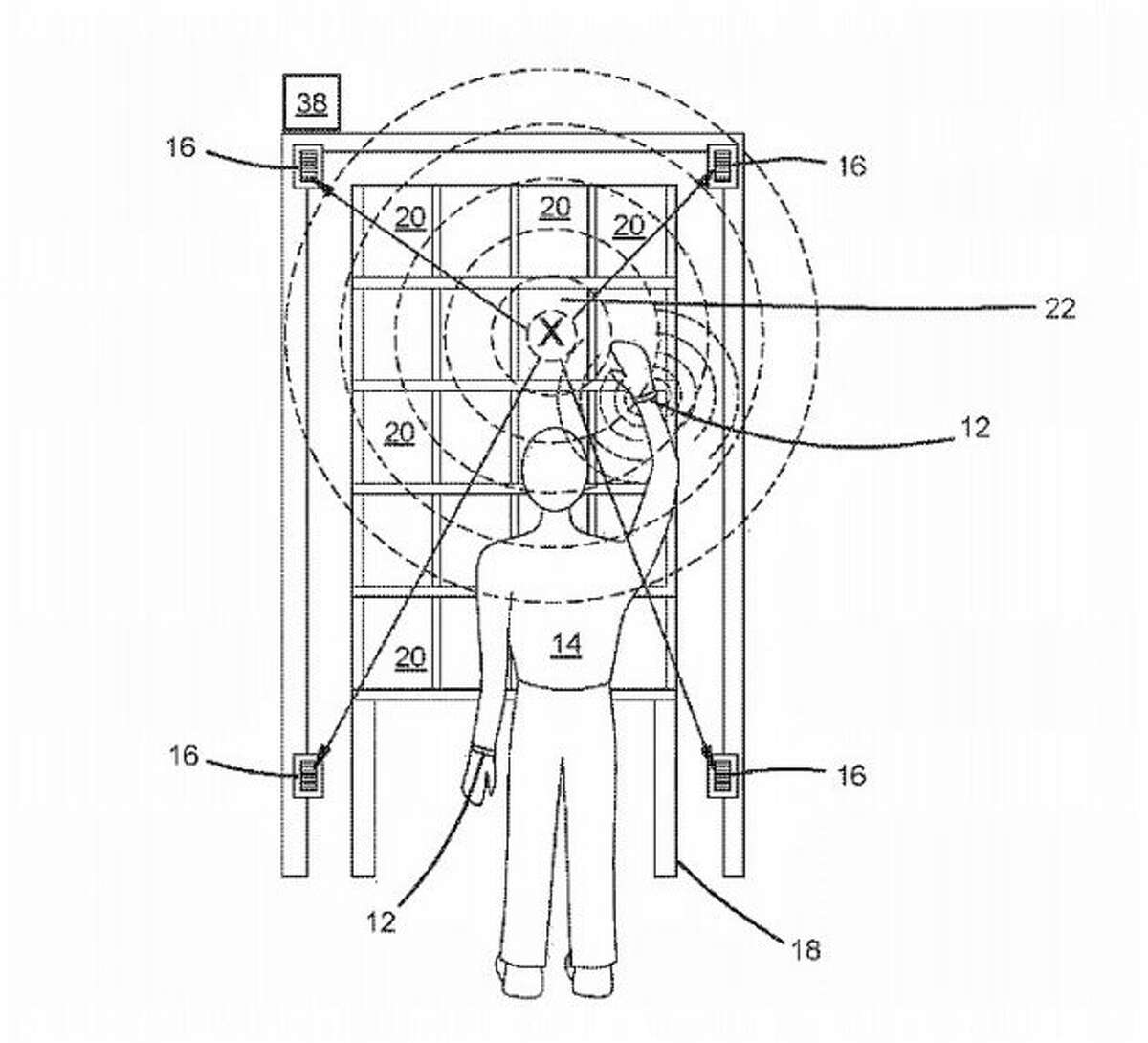

Amazon in January was granted two patents (pictured above) for a radio-frequency wristband system its warehouse workers could wear that would buzz to let them know when they're reaching for the right places.

Where it could go "Black Mirror" wrong: The Amazon patents, with terms such as "haptic feedback," inspired some predictable "Black Mirror" comparisons. The company called the speculation "misguided," and there's no evidence of any nefarious intent here – even if the company is known for limiting workers' restroom breaks. The company may never actually create the wristbands, but concern for the abuse of similar snooping technology is bound to be with us for decades to come. A former Amazon warehouse worker told the New York Times the wristbands were "stalkerish," and that the company already used similar tracking technology on its employees.

USPTO/Amazon

An apparent iPhone glitch, one that occurs without any warning, can cause your alarm to go off so quietly, you may not even hear it.

I learned about this issue the hard way, when I nearly missed a 7 a.m. flight because my alarm didn't go off. More accurately, it had gone off, but I hadn't heard it. And if an alarm goes off and no one is around to hear it, does it make a sound?

After jumping out of bed at 5:34 a.m. and getting ready at lightning speed, I did some Google searching from the backseat of a Lyft to the airport. I found others had the same problem. More than 1,200 people said their alarms were also ringing at an undetectably low volume, even though the phone's volume was set to high.


The change seems to happen without any warning. One morning your alarm will go off like normal, the next you may not be able to hear it. And it's not tied to any other volume changes you make on your phone.

Some users on the Apple help forum blamed the problem on an update to iOS 11, but we couldn't reach Apple for official comment.

The alarm will ring quietly for about 15 minutes before snoozing, then ring again. Unless you have supersonic hearing, chances are you won't hear it and you may be late for work (or a flight!).

The good news is there's an easy fix. If this happens to you, go to "Settings" on your iPhone or iPad, then tap "Sounds & Haptics." You'll see the volume slider under "Ringer and Alerts" is set to the lowest possible option. Using your finger on the touchscreen, drag the slider to the right to increase the volume of alerts.

You can also determine whether you want the volume of the ringer and alerts to be controlled by the volume buttons on the side of the device.


SFGATE could not immediately reach Apple for comment on the apparent glitch.

Read Alix Martichoux's latest stories and send her news tips at amartichoux@sfchronicle.com.


Source: https://www.sfgate.com/tech/article/apple-ios-alarm-volume-not-working-quiet-loud-ring-12875840.php

Posted by: valeropamentier.blogspot.com
